Guilin Liu

Research Scientist at NVIDIA

Santa Clara, CA

Email: lastname+firstname+1225 at gmail.com

Email (Company): firstname+l at company.com

Google Scholar · LinkedIn · Twitter

Publications (see Google Scholar for the most recent ones):

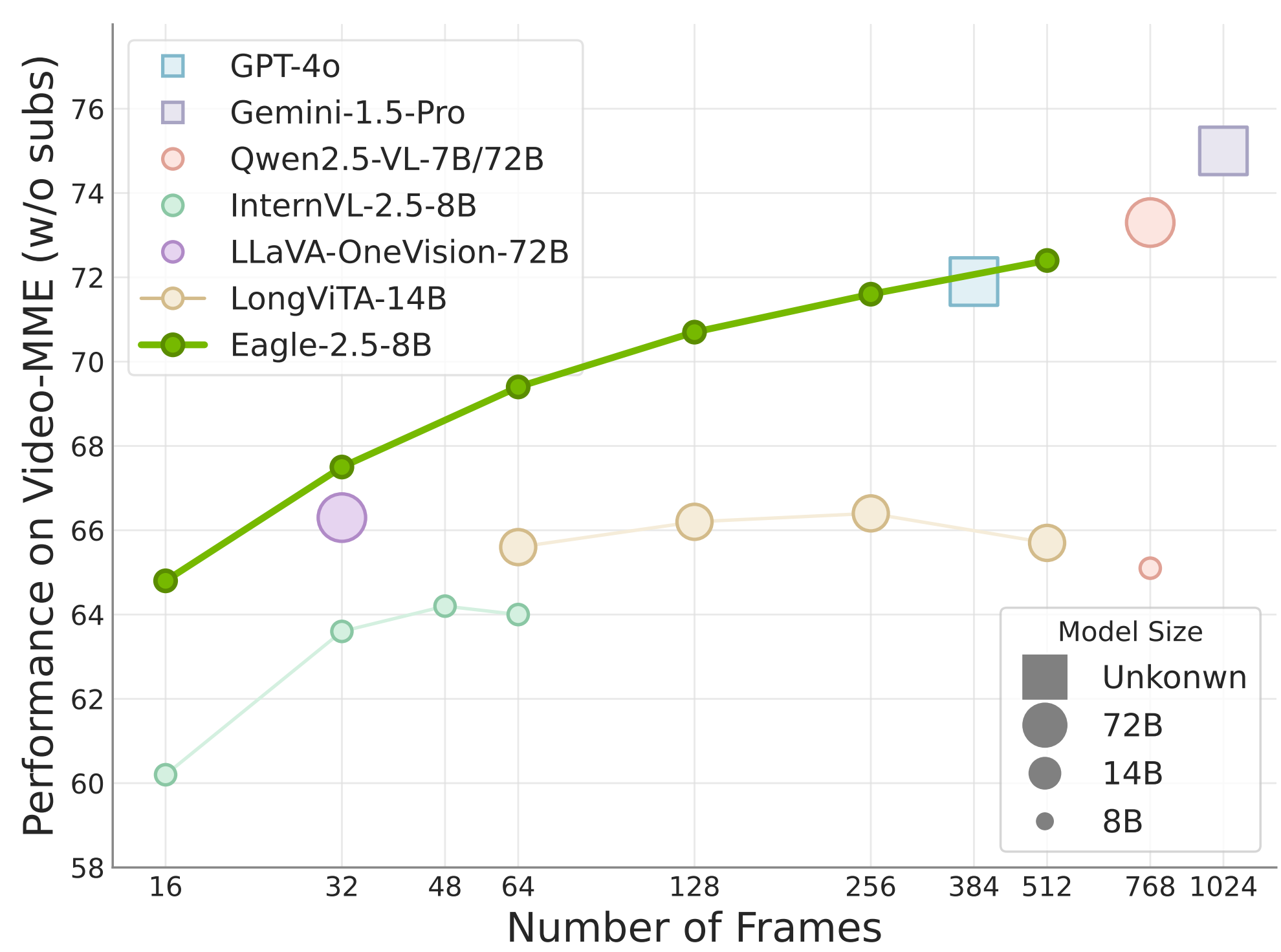

Eagle 2.5: Boosting Long-Context Post-Training for Frontier Vision-Language Models. Guo Chen, Zhiqi Li, Shihao Wang, Jindong Jiang, Yicheng Liu, Lidong Lu, De-An Huang, Wonmin Byeon, Matthieu Le, Tuomas Rintamaki, Tyler Poon, Max Ehrlich, Tong Lu, Limin Wang, Bryan Catanzaro, Jan Kautz, Andrew Tao, Zhiding Yu, Guilin Liu*. NeurIPS 2025. Paper · Code · Checkpoint (NVIDIA Hugging Face)

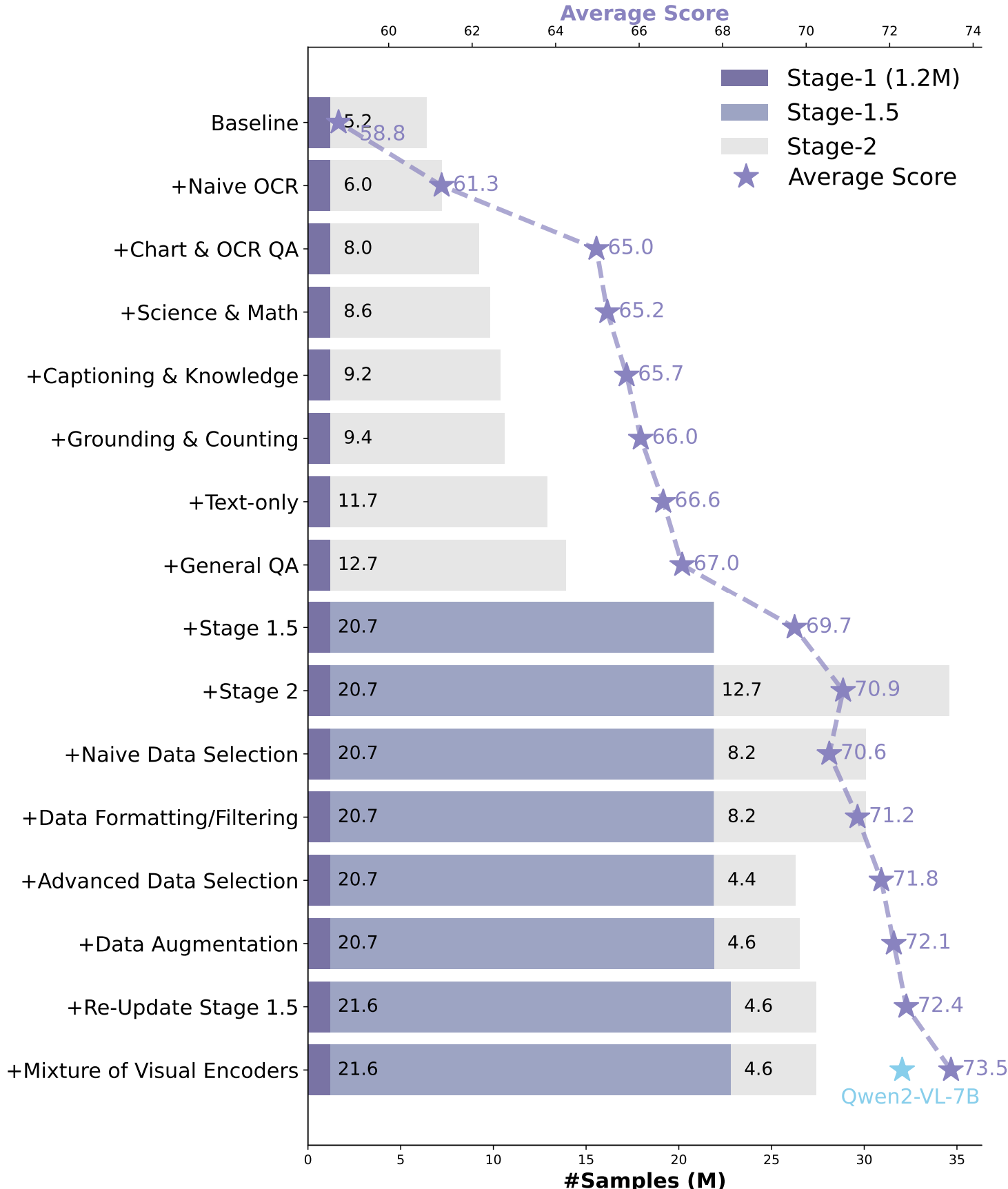

Eagle 2: Building Post-Training Data Strategies from Scratch for Frontier Vision-Language Models. Zhiqi Li, Guo Chen, Shilong Liu, Shihao Wang, Vibashan VS, Yishen Ji, Shiyi Lan, Hao Zhang, Yilin Zhao, Subhashree Radhakrishnan, Nadine Chang, Karan Sapra, Amala Sanjay Deshmukh, Tuomas Rintamaki, Matthieu Le, Ilia Karmanov, Lukas Voegtle, Philipp Fischer, De-An Huang, Timo Roman, Tong Lu, Jose M Alvarez, Bryan Catanzaro, Jan Kautz, Andrew Tao, Guilin Liu*, Zhiding Yu*. arXiv 2025. Paper · Code

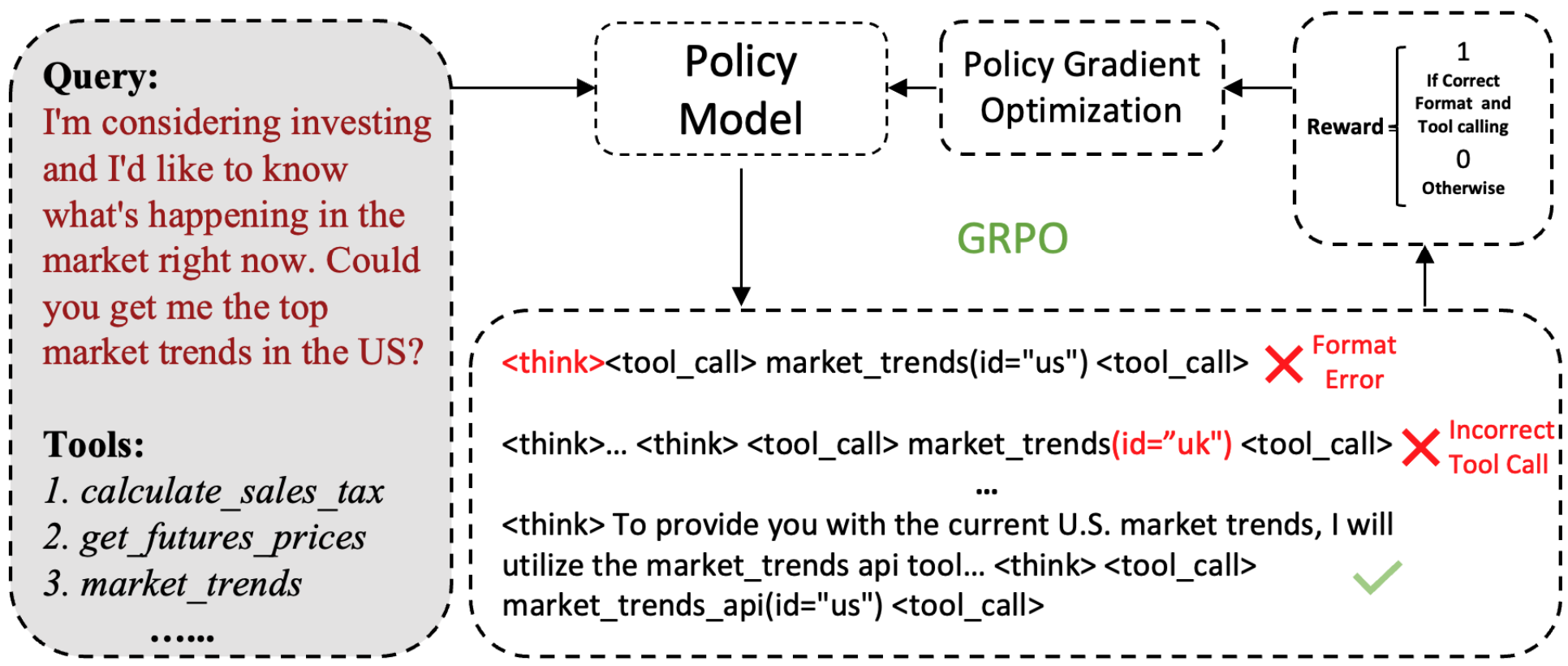

Nemotron-Research-Tool-N1: Tool-Using Language Models with Reinforced Reasoning. Shaokun Zhang, Yi Dong, Jieyu Zhang, Jan Kautz, Bryan Catanzaro, Andrew Tao, Qingyun Wu, Zhiding Yu, Guilin Liu. arXiv 2025. Paper

EAGLE: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders. Min Shi, Fuxiao Liu, Shihao Wang, Shijia Liao, Subhashree Radhakrishnan, De-An Huang, Hongxu Yin, Karan Sapra, Yaser Yacoob, Humphrey Shi, Bryan Catanzaro, Andrew Tao, Jan Kautz, Zhiding Yu*, Guilin Liu*. ICLR 2025. Explores the vision-encoder design space for vision-language models (VLMs). Paper · Code

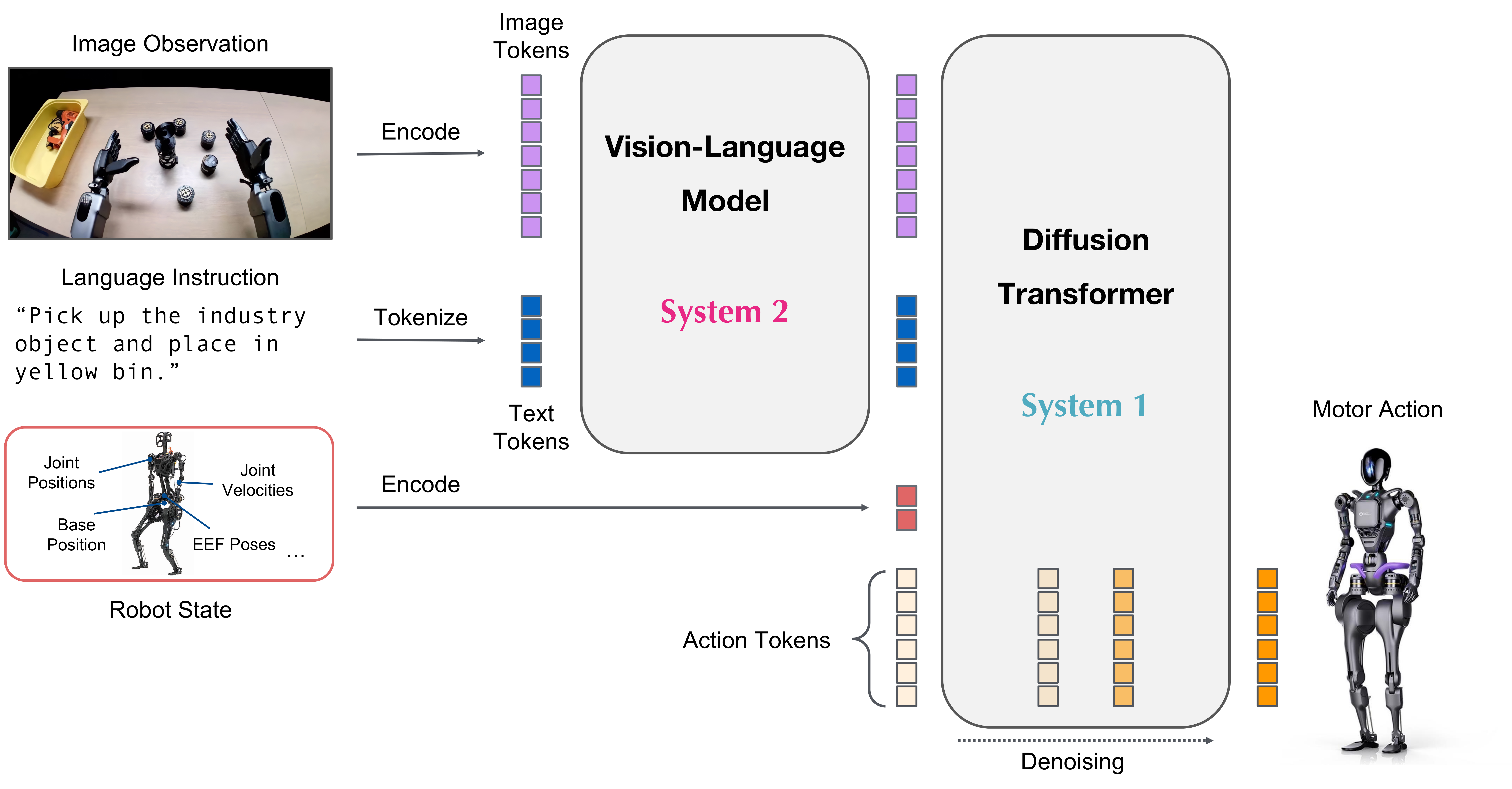

GR00T N1: An Open Foundation Model for Generalist Humanoid Robots. Johan Bjorck, Fernando Castañeda, Nikita Cherniadev, et al., Guilin Liu, et al., Yuke Zhu. arXiv 2025. Paper · Code · Hugging Face Model

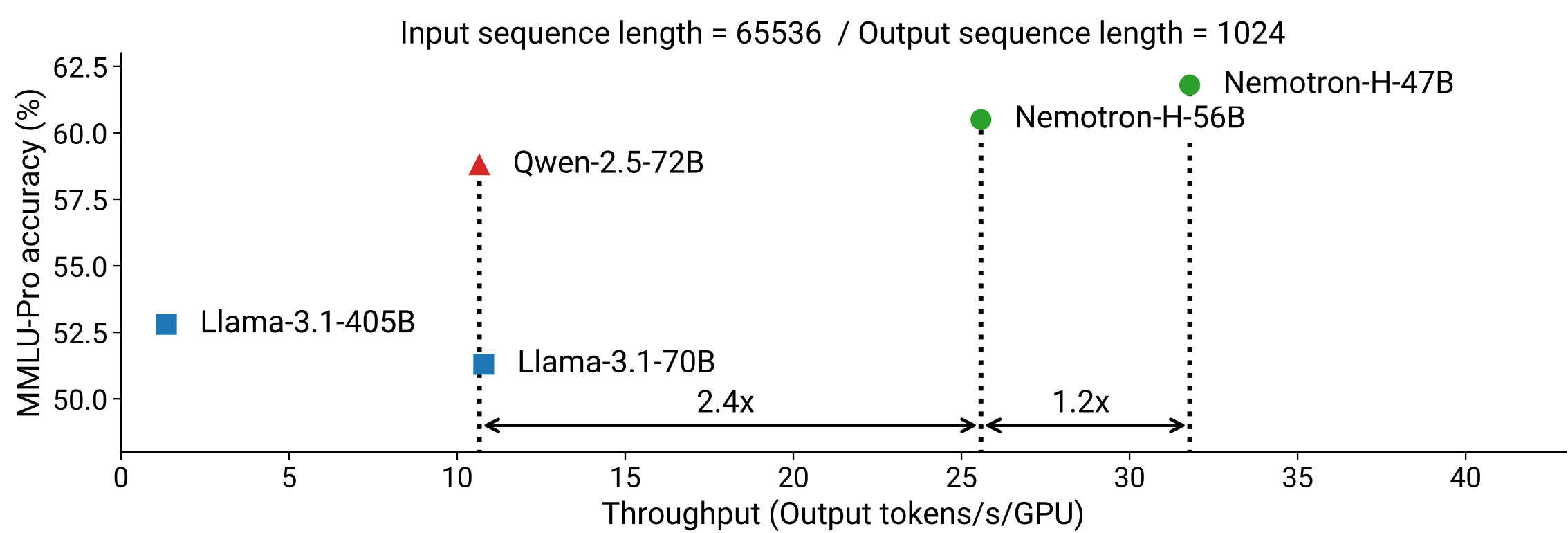

Nemotron-H: A Family of Accurate and Efficient Hybrid Mamba-Transformer Models. Aaron Blakeman, Aarti Basant, Abhinav Khattar, et al., Guilin Liu, et al. arXiv 2025. Paper · Project · Hugging Face Model

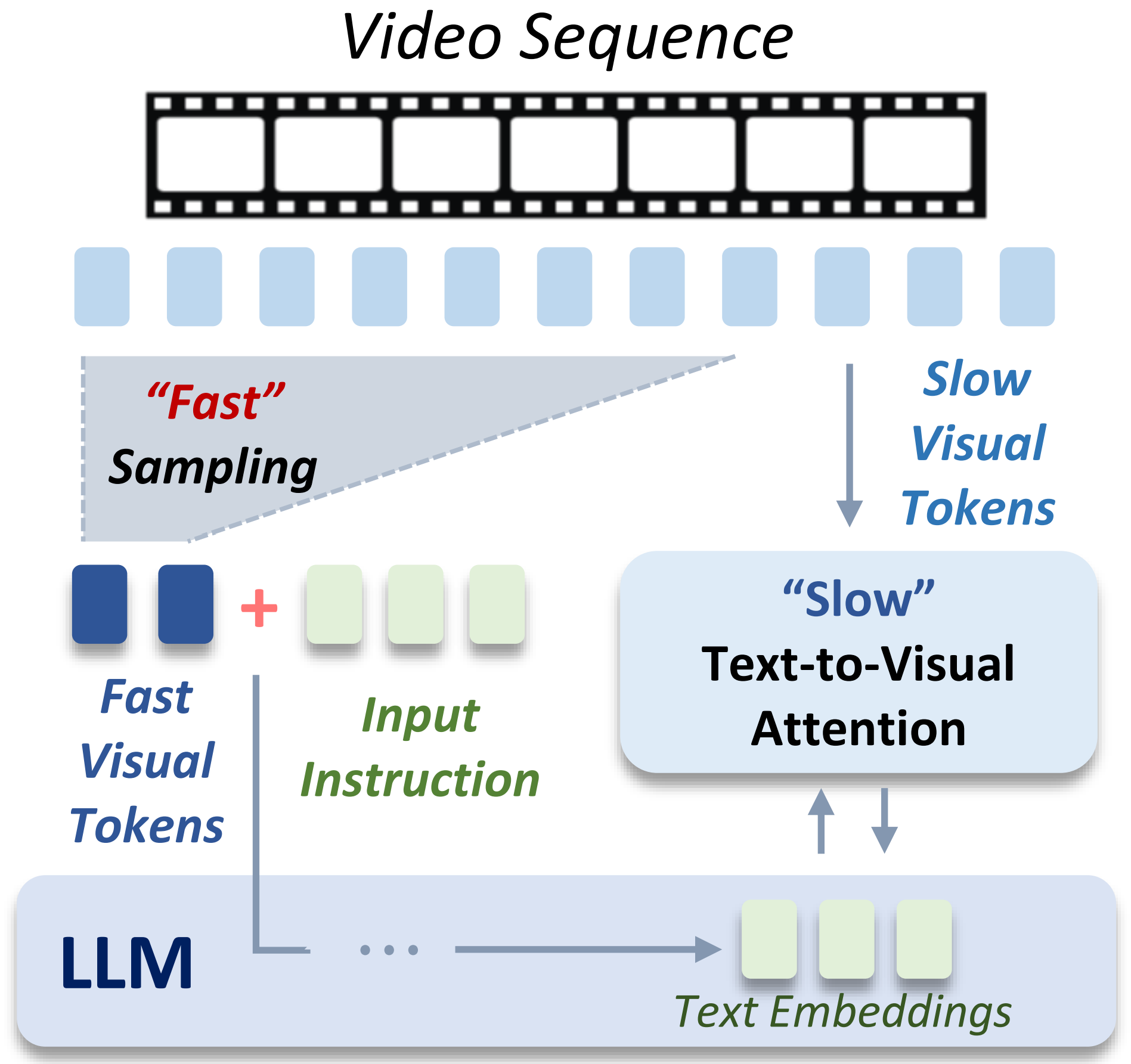

Slow-Fast Architecture for Video Multi-Modal Large Language Models. Min Shi, Shihao Wang, Chieh-Yun Chen, Jitesh Jain, Kai Wang, Junjun Xiong, Guilin Liu, Zhiding Yu, Humphrey Shi. arXiv 2025. Paper

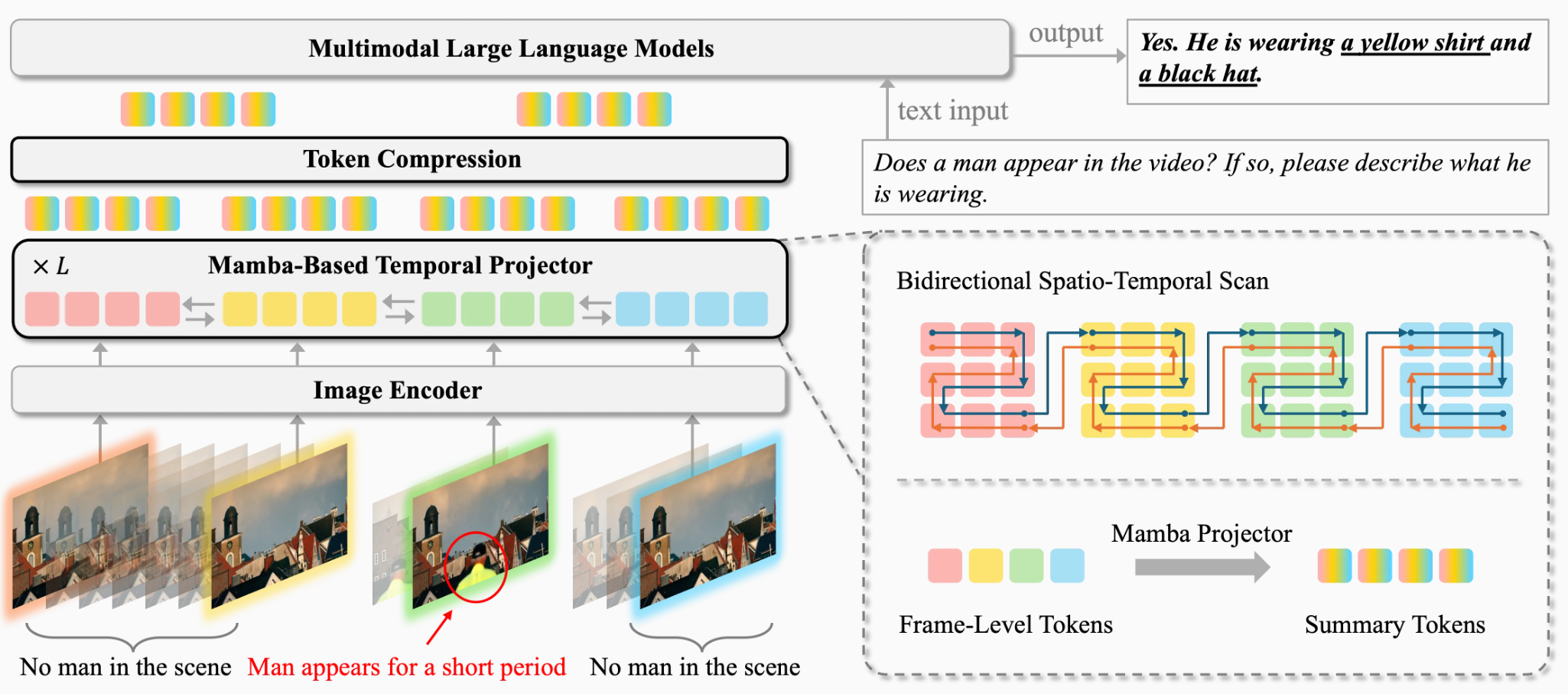

Token-Efficient Long Video Understanding for Multimodal LLMs. Jindong Jiang, Xiuyu Li, Zhijian Liu, Muyang Li, Guo Chen, Zhiqi Li, De-An Huang, Guilin Liu, Zhiding Yu, Kurt Keutzer, Sungjin Ahn, Jan Kautz, Hongxu Yin, Yao Lu, Song Han, Wonmin Byeon. arXiv 2025. Paper · Project

DiffiT: Diffusion Vision Transformers for Image Generation. Ali Hatamizadeh, Jiaming Song, Guilin Liu, Jan Kautz, Arash Vahdat. ECCV 2024. Paper

Preserve Your Own Correlation (PYoCo): A Noise Prior for Video Diffusion Models. Songwei Ge, Seungjun Nah, Guilin Liu, Tyler Poon, Andrew Tao, Bryan Catanzaro, David Jacobs, Jia-Bin Huang, Ming-Yu Liu, Yogesh Balaji. ICCV 2023. Paper · Project Page

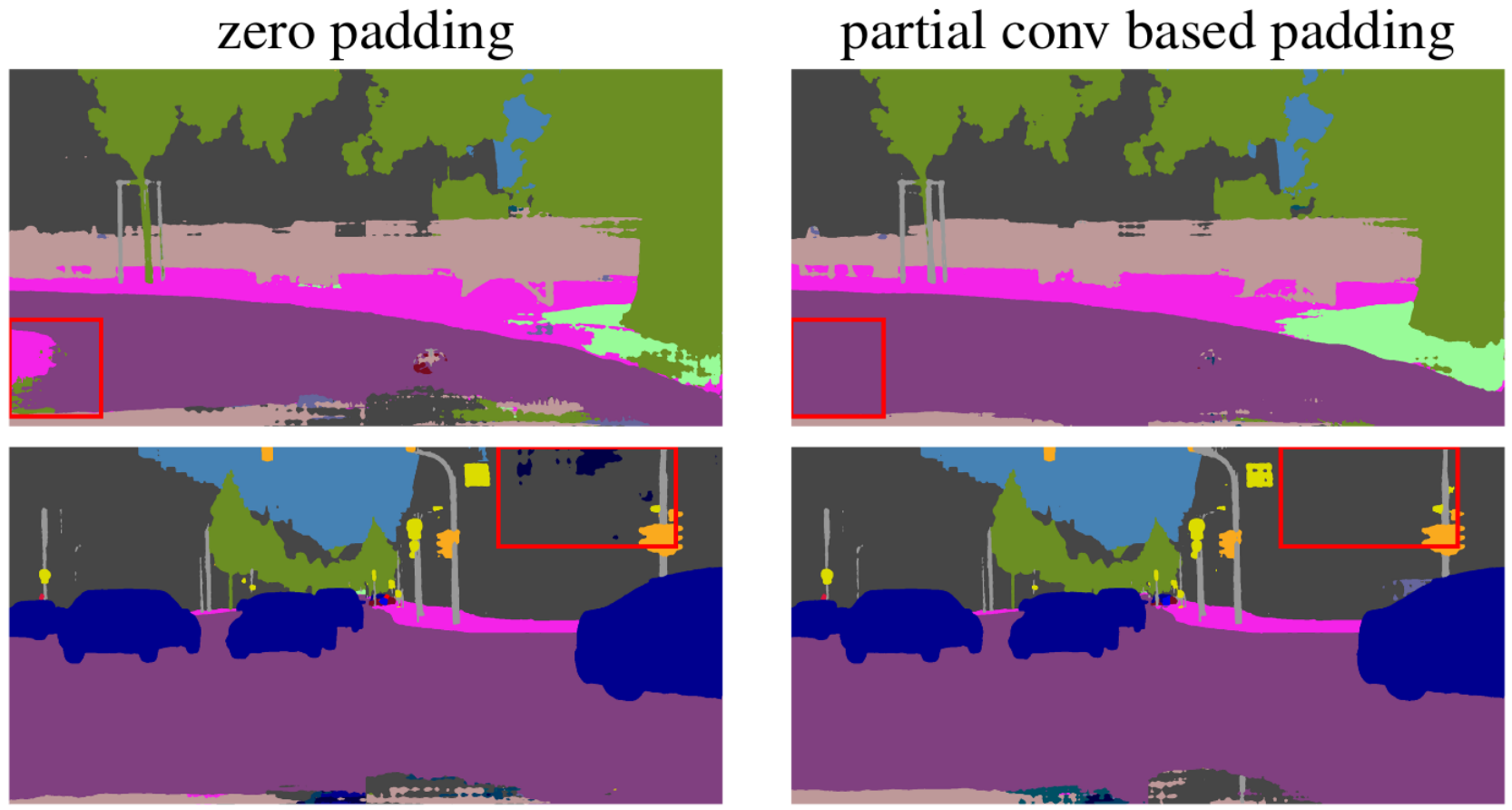

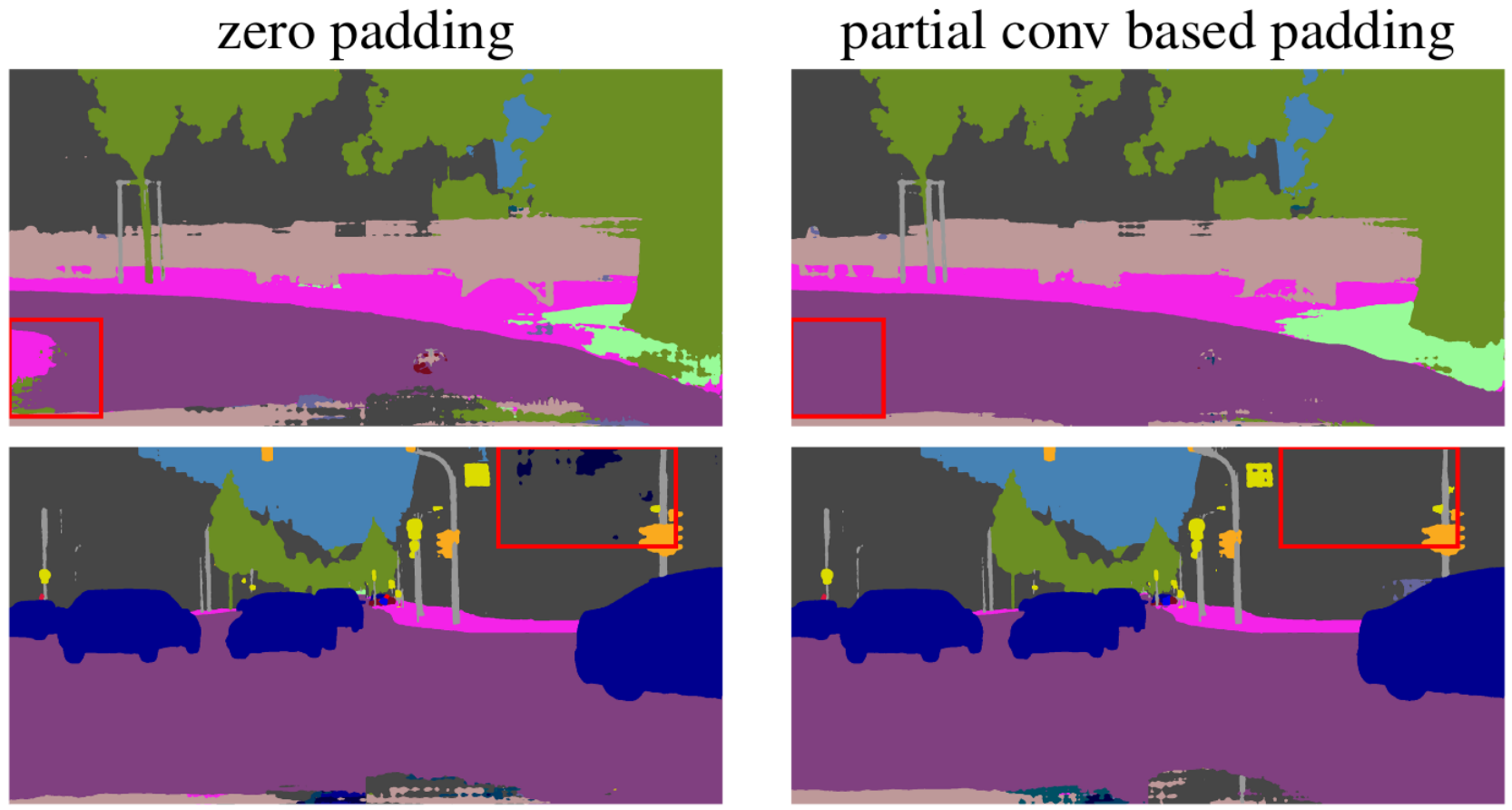

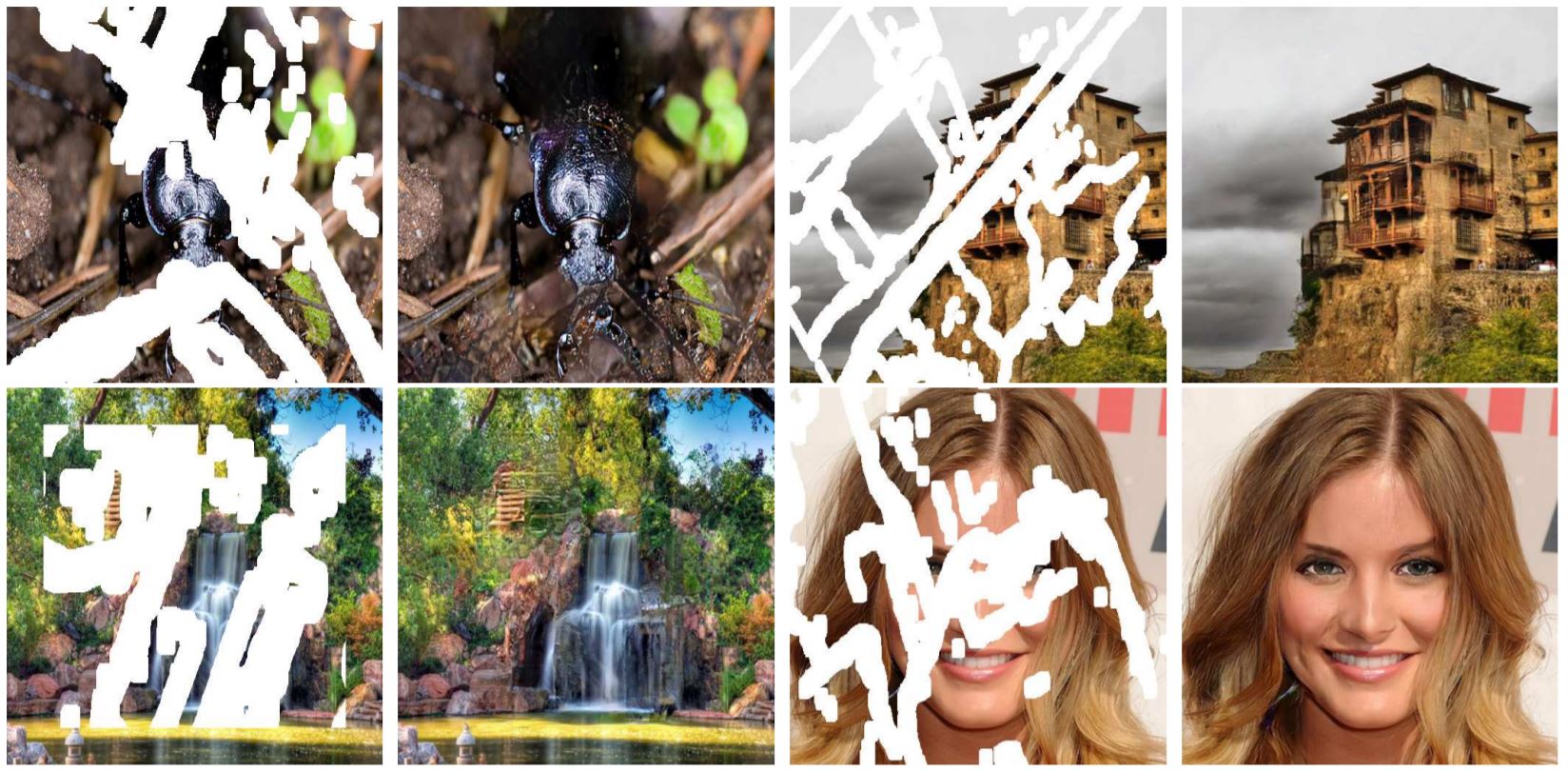

Partial Convolution for Padding, Inpainting, and Image Synthesis. Guilin Liu*, Aysegul Dundar*, Kevin J. Shih, Ting-Chun Wang, Fitsum A. Reda, Karan Sapra, Zhiding Yu, Xiaodong Yang, Andrew Tao, Bryan Catanzaro (*equal contribution). T-PAMI 2022. Paper

Coupled Segmentation and Edge Learning via Dynamic Graph Propagation. Zhiding Yu, Rui Huang, Wonmin Byeon, Sifei Liu, Guilin Liu, Thomas Breuel, Anima Anandkumar, Jan Kautz. NeurIPS 2021. Paper

Dual Contrastive Loss and Attention for GANs. Ning Yu, Guilin Liu, Aysegul Dundar, Andrew Tao, Bryan Catanzaro, Larry Davis, Mario Fritz. ICCV 2021. Paper

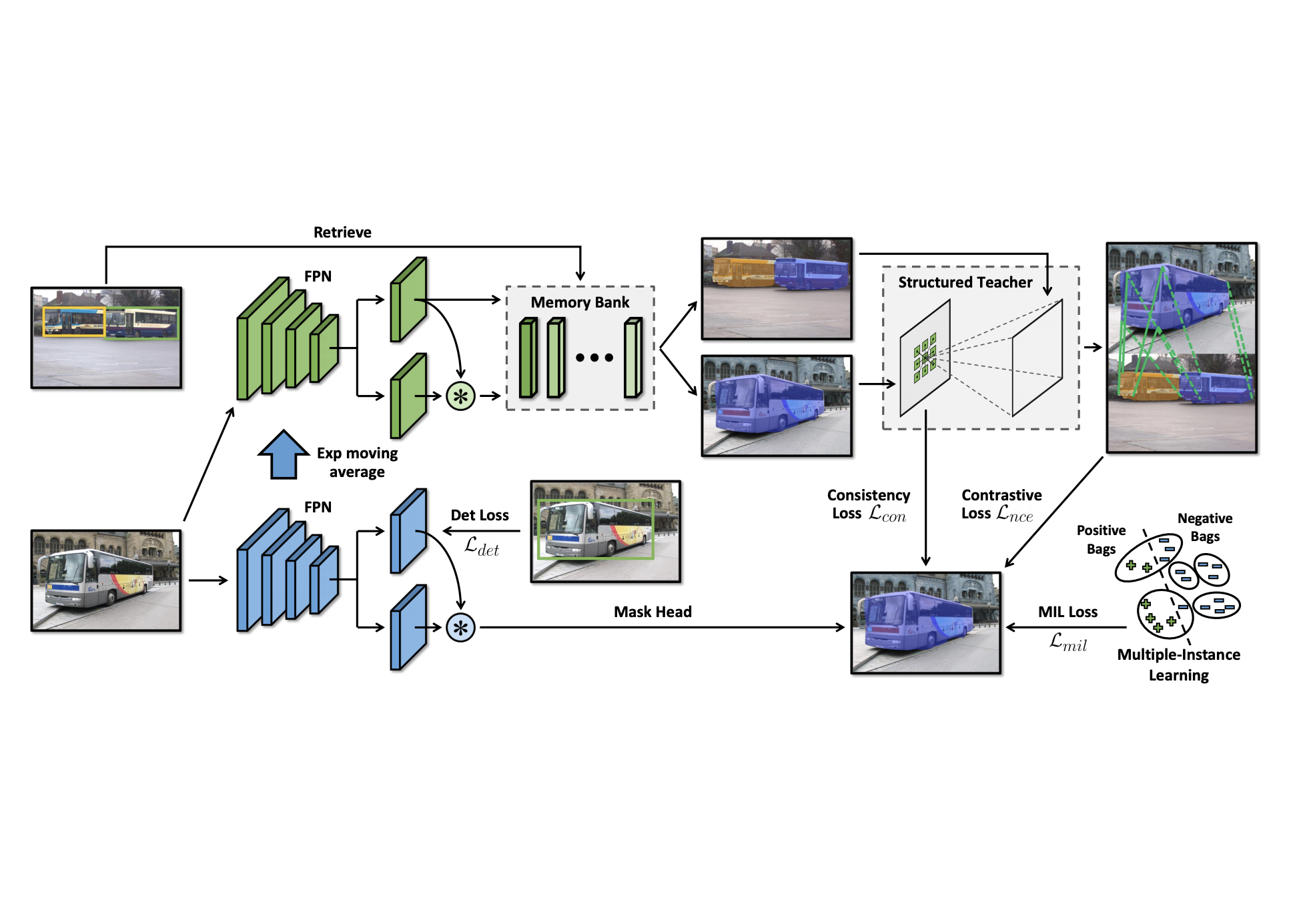

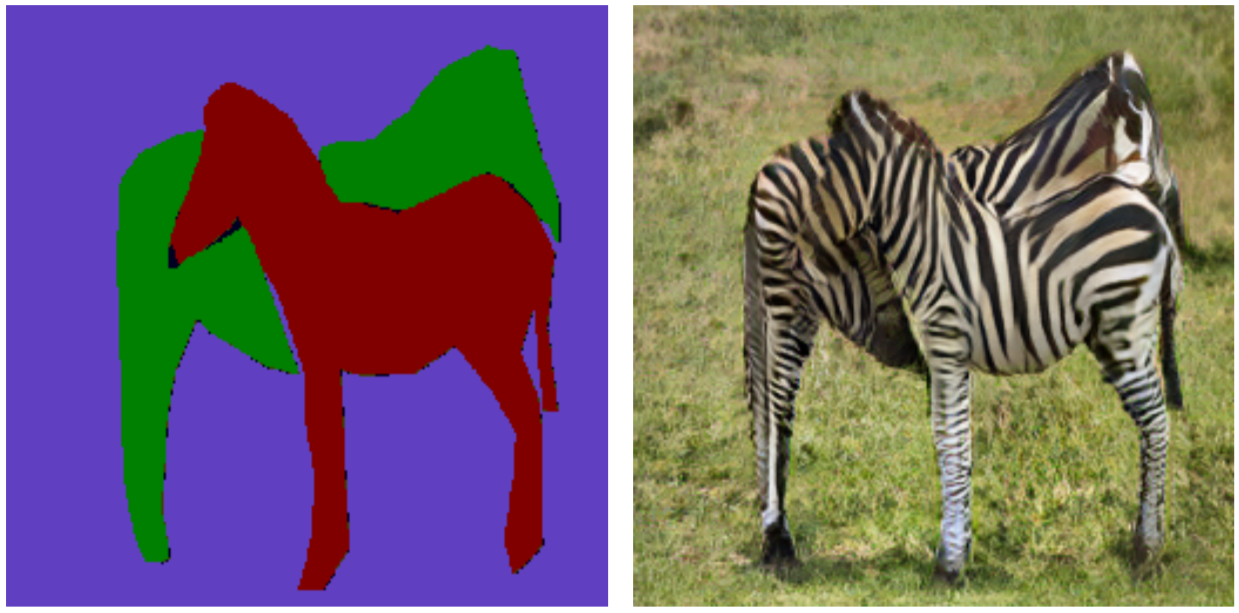

DiscoBox: Weakly Supervised Instance Segmentation and Semantic Correspondence from Box Supervision. Shiyi Lan, Zhiding Yu, Christopher Choy, Subhashree Radhakrishnan, Guilin Liu, Yuke Zhu, Larry S. Davis, Anima Anandkumar. ICCV 2021. Paper · Code

View Generalization for Single Image Textured 3D Models. Anand Bhattad, Aysegul Dundar, Guilin Liu, Andrew Tao, Bryan Catanzaro. CVPR 2021. Paper · Web page

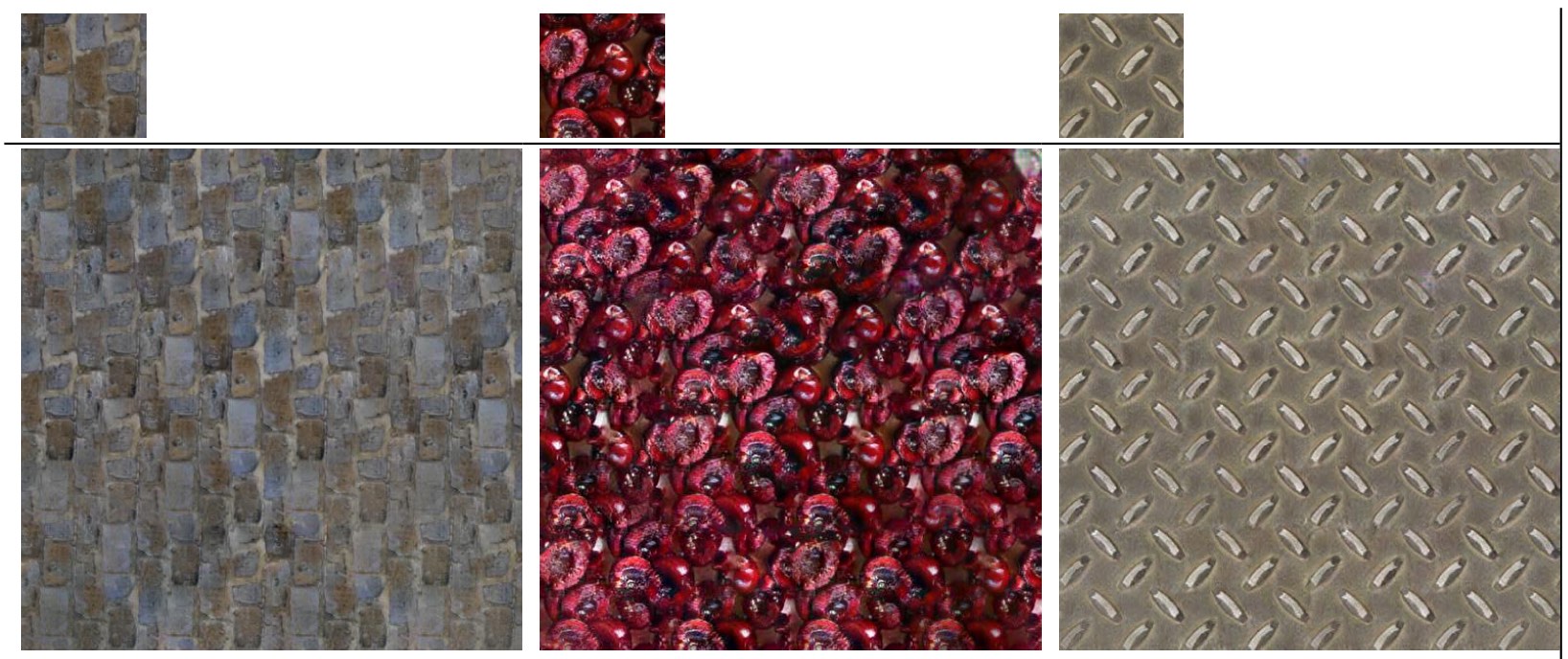

Neural FFTs for Universal Texture Image Synthesis. Morteza Mardani*, Guilin Liu*, Aysegul Dundar, Shiqiu Liu, Andrew Tao, Bryan Catanzaro (*equal contribution). NeurIPS 2020. Paper

Transposer: Universal Texture Synthesis Using Feature Maps as Transposed Convolution Filter. Guilin Liu, Rohan Taori, Ting-Chun Wang, Zhiding Yu, Shiqiu Liu, Fitsum A. Reda, Karan Sapra, Andrew Tao, Bryan Catanzaro. arXiv preprint. Paper · 1-min video · 6-min video

Panoptic-based Image Synthesis. Aysegul Dundar, Karan Sapra, Guilin Liu, Andrew Tao, Bryan Catanzaro. CVPR 2020. Paper

Partial Convolution based Padding. Guilin Liu, Kevin J. Shih, Ting-Chun Wang, Fitsum A. Reda, Karan Sapra, Zhiding Yu, Xiaodong Yang, Andrew Tao, Bryan Catanzaro. arXiv preprint. Paper · Code

Few-Shot Video-to-Video Synthesis. Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz, Bryan Catanzaro. NeurIPS 2019. Project · Paper · GitHub · YouTube

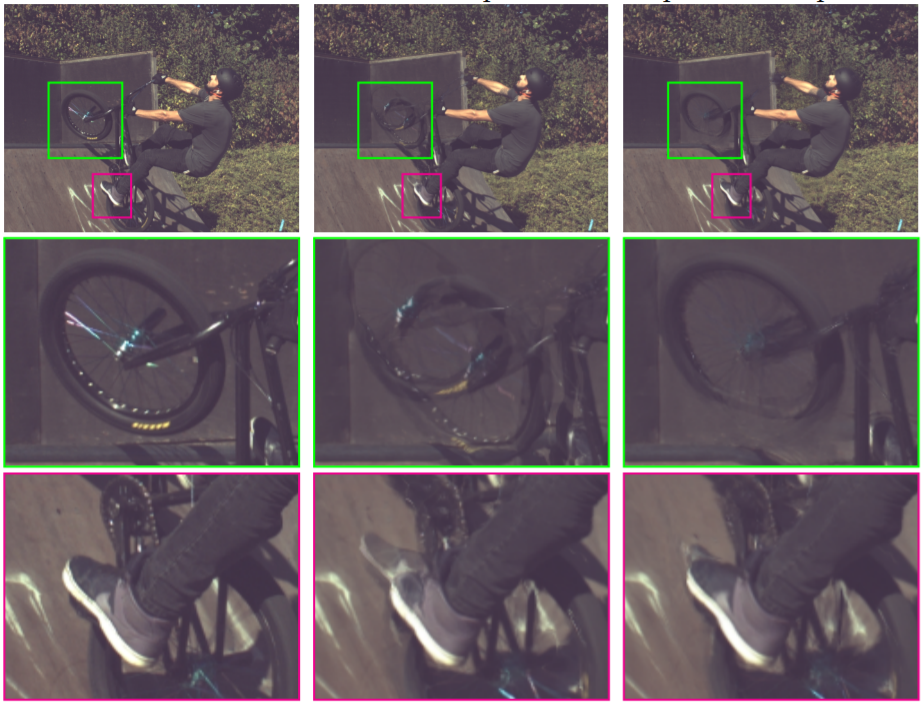

Unsupervised Video Interpolation Using Cycle Consistency. Fitsum A. Reda, Deqing Sun, Aysegul Dundar, Mohammad Shoeybi, Guilin Liu, Kevin J. Shih, Andrew Tao, Jan Kautz, Bryan Catanzaro. ICCV 2019. Paper

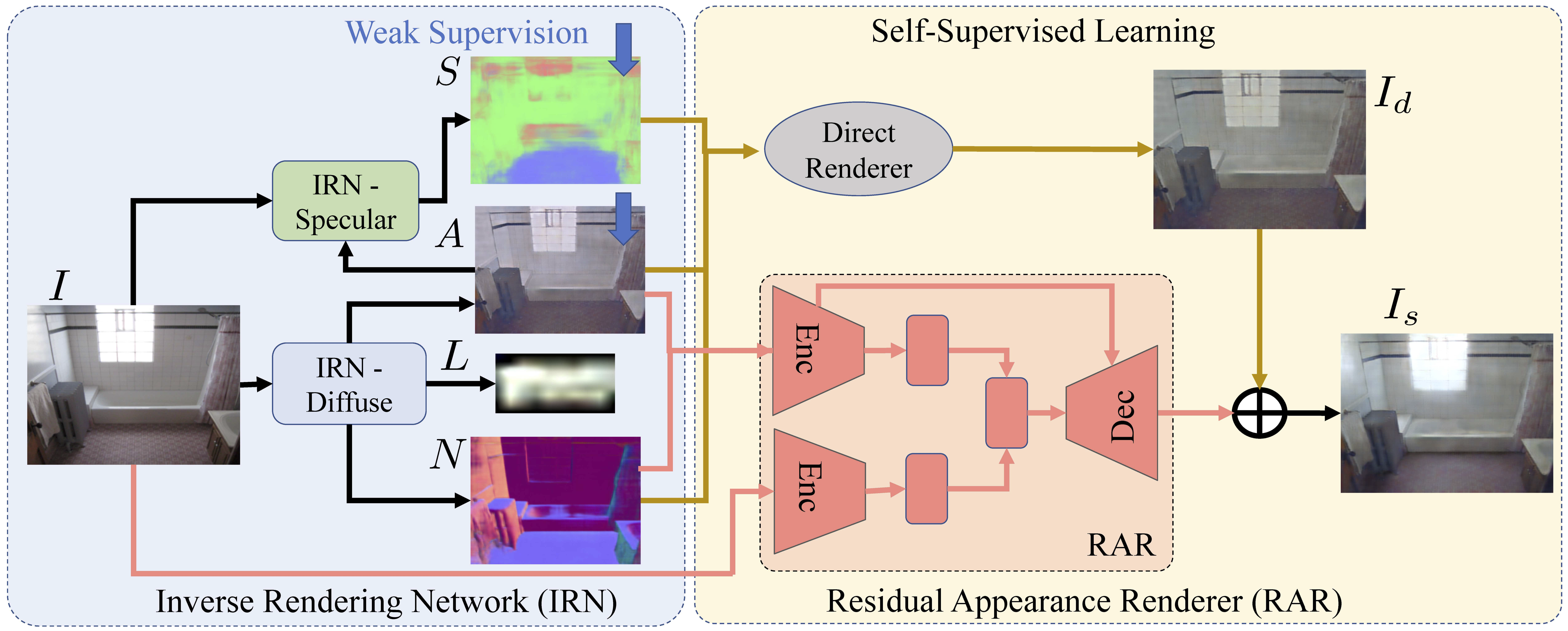

Neural Inverse Rendering of an Indoor Scene from a Single Image. Soumyadip Sengupta, Jinwei Gu, Kihwan Kim, Guilin Liu, David Jacobs, Jan Kautz. ICCV 2019. Paper

Image Inpainting for Irregular Holes Using Partial Convolutions. Guilin Liu, Fitsum A. Reda, Kevin J. Shih, Ting-Chun Wang, Andrew Tao, Bryan Catanzaro. ECCV 2018. Paper · Project · Video · Fortune · Forbes · GTC Keynote Live Demo with NVIDIA CEO Jensen Huang

Video-to-Video Synthesis. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, Bryan Catanzaro. NeurIPS 2018. Paper · Project · Video · arXiv · Code

SDC-Net: Video prediction using spatially-displaced convolution. Fitsum A. Reda, Guilin Liu, Kevin J. Shih, Robert Kirby, Jon Barker, David Tarjan, Andrew Tao, Bryan Catanzaro. ECCV 2018. Paper

Material Editing Using a Physically Based Rendering Network. Guilin Liu, Duygu Ceylan, Ersin Yumer, Jimei Yang, Jyh-Ming Lien. ICCV 2017. Paper · Project · Data

Symmetry-aware Depth Estimation using Deep Neural Networks. Guilin Liu, Chao Yang, Zimo Li, Duygu Ceylan, Qixing Huang. arXiv 2016. arXiv

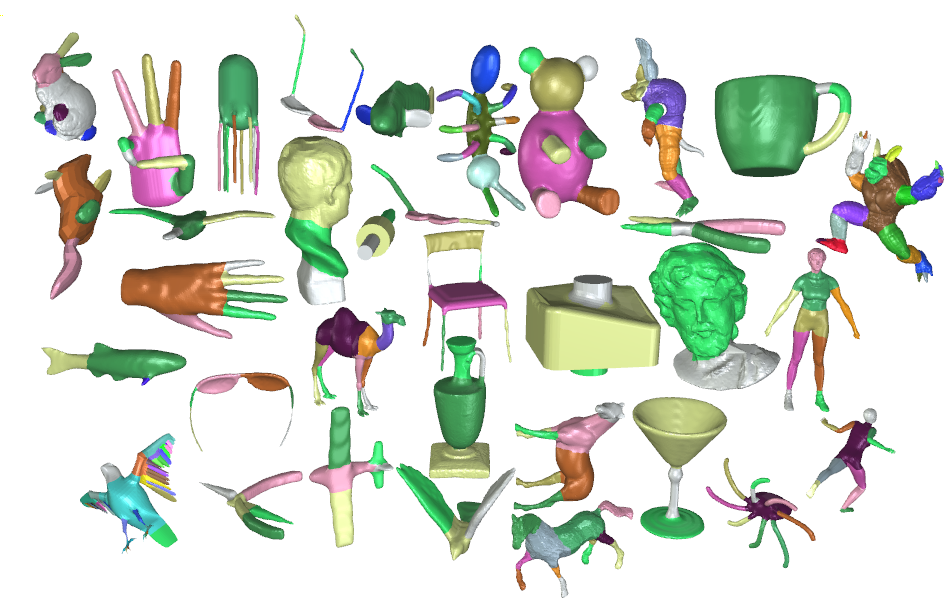

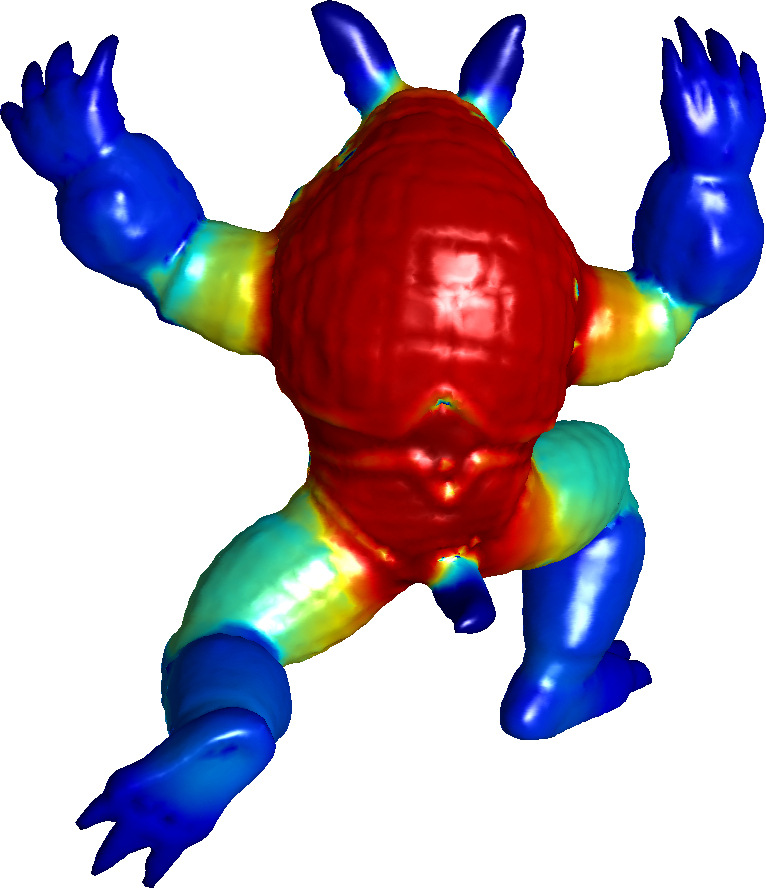

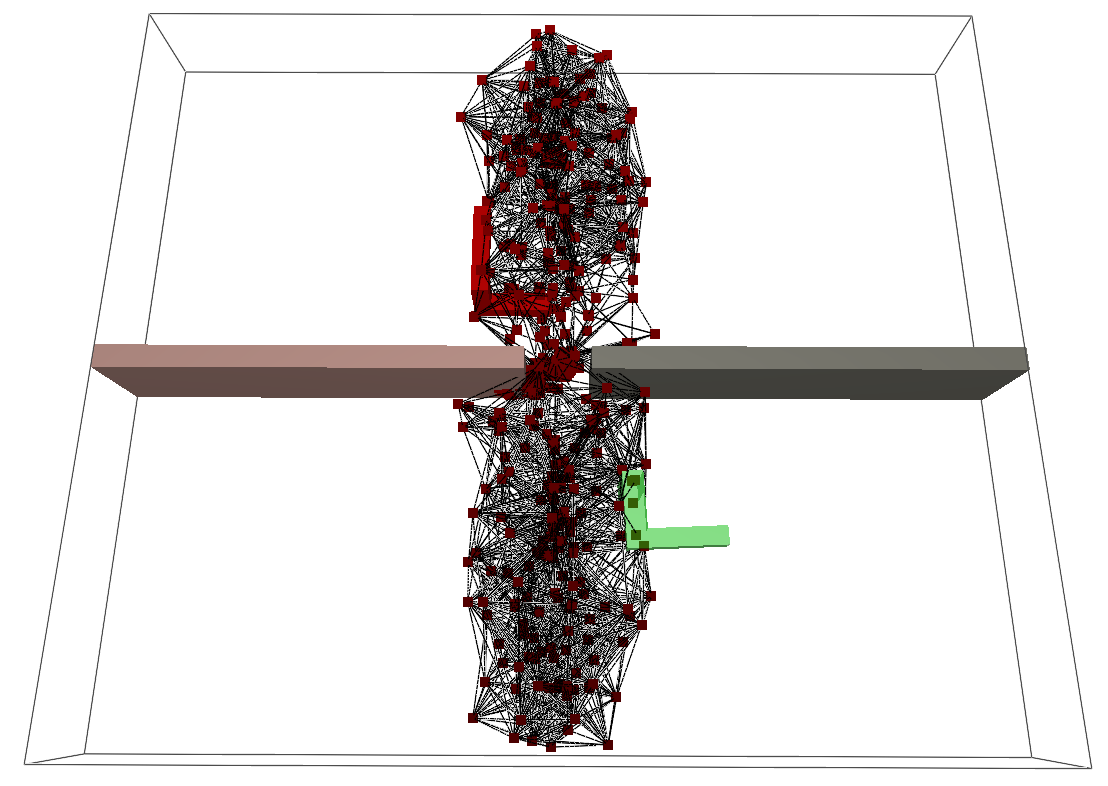

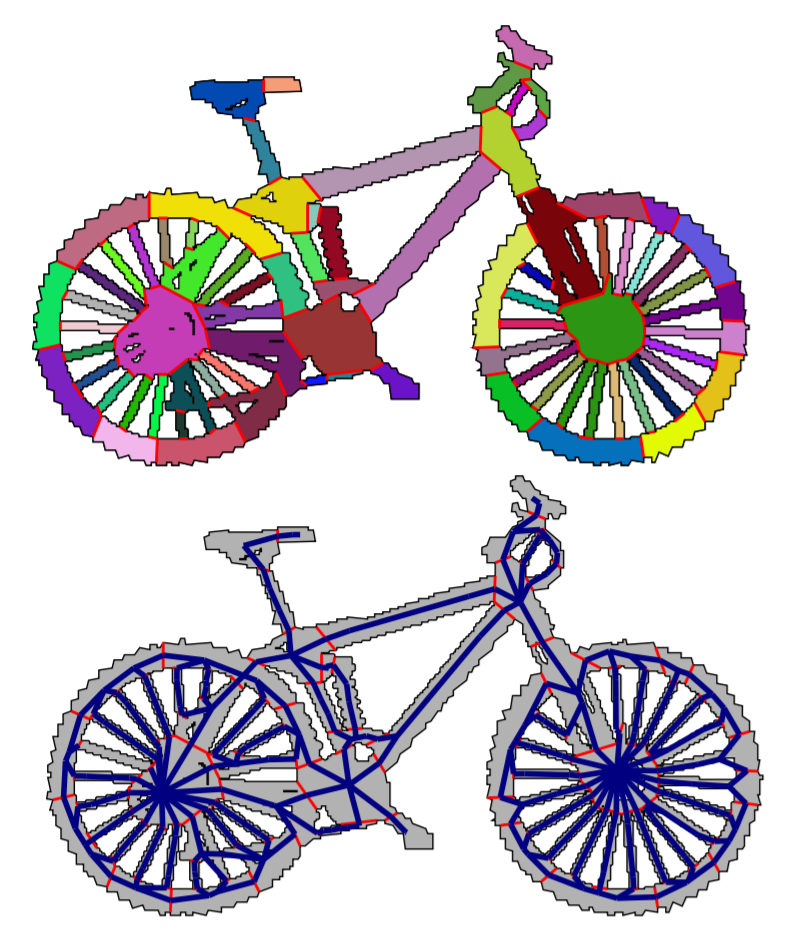

Nearly Convex Segmentation of Polyhedra Through Convex Ridge Separation. Guilin Liu, Zhonghua Xi, Jyh-Ming Lien. SPM 2016; also published in the journal Computer-Aided Design. Paper · Project · Video

Continuous Visibility Feature. Guilin Liu, Zhonghua Xi, Jyh-Ming Lien. CVPR 2015. Paper · Project · Code

Fast Medial Axis Approximation via Max-Margin Pushing. Guilin Liu, Jyh-Ming Lien. IROS 2015. Paper · Project · Video

Dual-Space Decomposition of 2D Complex Shapes. Guilin Liu, Zhonghua Xi, Jyh-Ming Lien. CVPR 2014. Paper · Project · Code